Human Pose Validation with TensorFlow.js for Virtual Try-On Applications

Introduction

In today’s e-commerce landscape, virtual try-on technology is reshaping the way users shop for clothing online. Allowing customers to see how clothing fits before purchasing not only enhances the shopping experience but also reduces return rates due to improper sizing. But to make virtual try-ons effective, it’s essential that the photos users upload meet certain quality standards—both in terms of image clarity and pose accuracy.

This article will explore how human pose validation, implemented with TensorFlow.js, can ensure that user-submitted photos provide optimal input for virtual try-on plugins. By validating poses on the client side, we can filter out substandard photos in real-time, providing immediate feedback to users before the images are processed on the backend. This approach enhances accuracy and user satisfaction in virtual fitting solutions.

Overview

TensorFlow.js, a JavaScript library for machine learning, enables efficient real-time human pose detection on the client side. Here’s a breakdown of the validation tasks and how TensorFlow.js supports each:

- Detecting a Person in the Image:

TensorFlow.js allows us to run human detection algorithms to confirm that the uploaded image contains a human subject. This detection filters out irrelevant images and serves as the basis for further pose validation.

- Applying Validation Rules Using Detection Data:

Once a human pose is detected, we use pose data to apply specific validation rules, ensuring that the image meets quality standards. Key validation checks include:

- Single Person Detection: The app must ensure only one individual appears in the image to avoid sizing errors. TensorFlow.js enables us to validate this by identifying the number of distinct people in the frame.

- Full Body in Frame: For an accurate virtual try-on, the plugin requires the entire body to be visible. Using TensorFlow.js, we confirm that all critical body parts (e.g., head, shoulders, knees, and feet) are within the frame and visible.

- Pose Simplicity: Complex poses can interfere with accurate garment overlay and size calculations. By detecting a neutral standing posture, we can filter out images where the user is in an unusual or dynamic position. This also includes ensuring that users follow the instructed poses: front-facing and side-view.

Implementation

1. Set Up the React Environment

First, if you don't have a React project set up, create a new one:

    yarn create vite tensorflow-react --template react
    cd tensorflow-react
    yarn
    yarn dev

2. Upload & Preview image

    import { useRef, useState } from 'react';

    function App() {
      const [image, setImage] = useState(null);
      const [error, setError] = useState(null);
      const imageRef = useRef(null); // Create a ref as an input to posenet processing

      const handleFileChange = (event) => {
        setError(null);
        const file = event.target.files?.[0];
        if (file && file.type.startsWith('image/')) {
          const reader = new FileReader();
          reader.onload = (e) => {
            setImage(e.target?.result);
          };
          reader.readAsDataURL(file);
        } else {
          setError('Please upload a valid image file.');
        }
      };

      return (
        <div>
          <div>
            <input
              type='file'
              onChange={handleFileChange}
              accept='image/*'
              aria-label='Upload image'
            />
          </div>
          {error && <p style={{ color: 'red' }}>{error}</p>}
          {image && (
            <div style={{ position: 'relative' }}>
              <img
                ref={imageRef}
                src={image}
                alt='Uploaded preview'
                width={300}
                height={400}
              />
            </div>
          )}
        </div>
      );
    }

    export default App;

3. Install & Extract Pose From Image

You'll need to install @tensorflow/tfjs for TensorFlow.js, and @tensorflow-models/posenet for the PoseNet model.

Run the following commands in your React project directory:

yarn add @tensorflow/tfjs @tensorflow-models/posenet

Use PoseNet to extract poses from the image with the estimateMultiplePoses method. This method is specifically designed to detect multiple people in an image or video and estimate their body poses at the same time.

    import '@tensorflow/tfjs'; // registers the TensorFlow.js backend that PoseNet runs on
    import * as posenet from '@tensorflow-models/posenet';

    async function extractPoseFromImage(imgElement) {
      // Load the PoseNet model with its default configuration.
      const net = await posenet.load();
      const poses = await net.estimateMultiplePoses(imgElement, {
        flipHorizontal: false,
      });
      // Keep only confident detections to remove ambiguous data.
      const exactPoses = poses.filter((item) => item.score > 0.5);
      return exactPoses;
    }

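Call the function above from the image's onLoad handler. The handler assumes that App declares a humanDetected state, for example const [humanDetected, setHumanDetected] = useState(null), which the earlier snippet does not show:

    <img
      ref={imageRef}
      src={image}
      alt='Uploaded preview'
      width={300}
      height={400}
      onLoad={async () => {
        const poses = await extractPoseFromImage(imageRef.current);
        const pose = poses[0]; // undefined when no person is detected
        setHumanDetected(pose); // store the first detected pose in the humanDetected state
      }}
    />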

The function returns an array of poses. If there are no people in the image, the array is empty. If there are people in the image, the array looks like this:

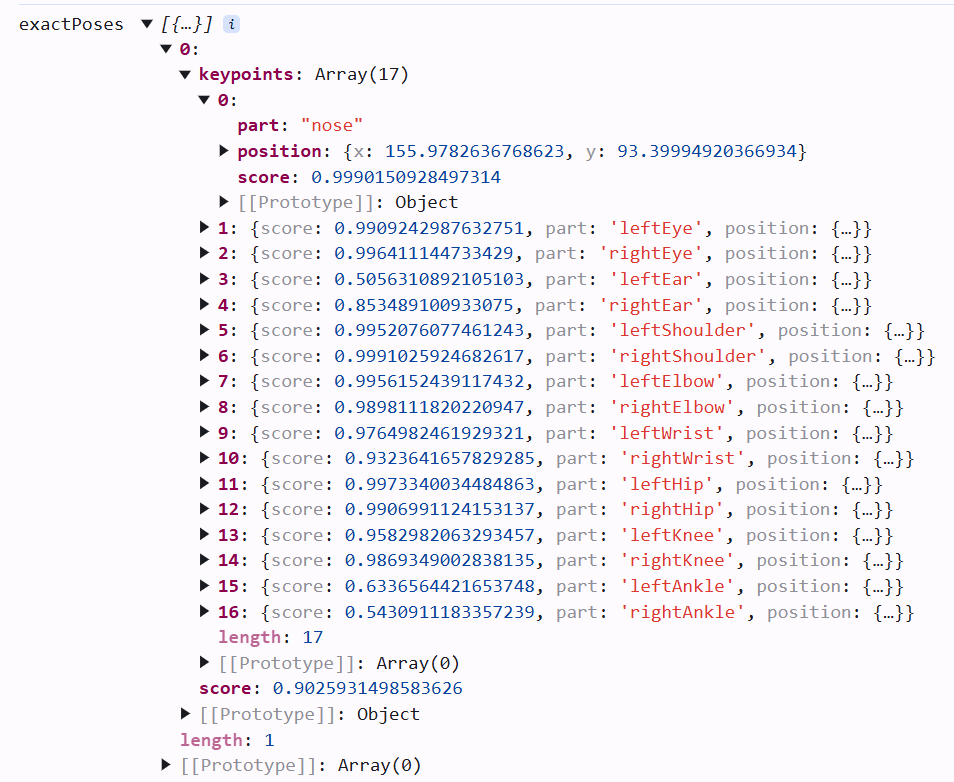

Each object in the poses array represents one person appearing in the image. Its score property gives the overall confidence of the detection, and its keypoints property lists the 17 detected body parts, each with its own confidence score and position. We can now use this data to check the rules discussed above.
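In case the screenshot is not visible, here is a rough sketch of the shape of one entry in that array. The field names (score, keypoints, part, position) follow PoseNet's output format. The numeric values are invented for illustration, only three of the 17 keypoints are shown, and the helper keypointsByPart is a hypothetical convenience that is not part of PoseNet.

```javascript
// Illustrative pose object in the shape PoseNet returns.
// All numbers are invented sample values, not real model output.
const samplePose = {
  score: 0.92, // overall confidence for this person
  keypoints: [
    { part: 'nose', score: 0.99, position: { x: 150, y: 40 } },
    { part: 'leftShoulder', score: 0.97, position: { x: 185, y: 95 } },
    { part: 'rightShoulder', score: 0.96, position: { x: 115, y: 96 } },
    // ...the remaining 14 keypoints follow the same shape
  ],
};

// Hypothetical helper: index keypoints by part name for easier lookups.
function keypointsByPart(keypoints) {
  return Object.fromEntries(keypoints.map((kp) => [kp.part, kp]));
}

const byPart = keypointsByPart(samplePose.keypoints);
console.log(byPart.nose.position, byPart.leftShoulder.score);
```

Indexing by part name keeps later rule checks readable, since each rule compares a handful of named body parts.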

- Detecting a Person in the Image:

If the length of the poses array is zero, the image is invalid.

- Applying Validation Rules Using Detection Data:

- Single Person Detection: If the length of the poses array is greater than 1, the image is invalid.

- Full Body in Frame: If any of the required keypoints does not score above 0.5, the image is invalid. The following code checks that the whole body is in the frame:

    function isWholeBodyDetected(keypoints) {
      const keypointsNeeded = [
        'leftElbow',
        'rightElbow',
        'leftWrist',
        'rightWrist',
        'rightHip',
        'leftHip',
        'rightKnee',
        'leftKnee',
        'nose',
        'leftAnkle',
        'rightAnkle',
        'leftShoulder',
        'rightShoulder',
      ];
      return keypointsNeeded.every((point) =>
        keypoints.find((kp) => kp.part === point && kp.score > 0.5),
      );
    }

- Standing Posture: This is the most difficult case to validate. It combines several conditions, such as comparing the vertical order of the body parts along the y-axis and their left-to-right positions along the x-axis.

- Collect the nose, shoulder, elbow, wrist, hip, knee, and ankle keypoints.

- Measure the distance from the nose to the shoulder on each side, take the larger of the two, and multiply it by 1.5. Call the result R.

- Check that the elbow points lie within the range from shoulder - R to shoulder + R, and apply the same check to the other points. (This catches limbs that are spread too wide or held in an incorrect position.)

- Check that the points are in the correct vertical order: shoulder above elbow, elbow above wrist, and so on. (This catches poses such as raising both arms high or sitting with legs crossed or bent.)

- Check that the distance between the two shoulders is at least 50 pixels. (This catches a turned body.)

- Check that the distance between the two knees is greater than the hip width minus 10 pixels. (This catches legs standing too close together.)
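The standing-posture rules above can be sketched as a single function. This is one reading of the rules under stated assumptions, not a definitive implementation. The function name checkStandingPosture and the rule labels are hypothetical. The x-range check is applied to every joint relative to the shoulder on the same side. Widths are measured along the x-axis only. The thresholds (1.5, 50 pixels, 10 pixels) are taken from the list as written, and only the front-facing pose is covered.

```javascript
// Sketch of the standing-posture rules for a front-facing pose.
// Keypoints use PoseNet's shape: { part, score, position: { x, y } }.
// In image coordinates y grows downward, so "above" means a smaller y.
const SIDES = ['left', 'right'];
const JOINTS = ['Shoulder', 'Elbow', 'Wrist', 'Hip', 'Knee', 'Ankle'];

// Returns the labels of the failed rules; an empty array means the pose passes.
function checkStandingPosture(keypoints) {
  const p = Object.fromEntries(keypoints.map((kp) => [kp.part, kp.position]));
  const required = ['nose', ...SIDES.flatMap((s) => JOINTS.map((j) => s + j))];
  if (!required.every((part) => p[part])) return ['missingKeypoints'];

  const dist = (a, b) => Math.hypot(a.x - b.x, a.y - b.y);
  // R: 1.5 times the larger of the two nose-to-shoulder distances.
  const R = 1.5 * Math.max(dist(p.nose, p.leftShoulder), dist(p.nose, p.rightShoulder));
  const failed = new Set();

  for (const side of SIDES) {
    const [shoulder, elbow, wrist, hip, knee, ankle] = JOINTS.map((j) => p[side + j]);

    // Assumption: each point must stay within R of the same-side shoulder on the x-axis.
    for (const point of [elbow, wrist, hip, knee, ankle]) {
      if (Math.abs(point.x - shoulder.x) > R) failed.add('limbsTooSpread');
    }

    // Vertical order, checked separately for the arm and the leg.
    const armOk = shoulder.y < elbow.y && elbow.y < wrist.y;
    const legOk = hip.y < knee.y && knee.y < ankle.y;
    if (!armOk || !legOk) failed.add('wrongVerticalOrder');
  }

  // Assumption: widths are measured horizontally (x-axis only).
  const width = (a, b) => Math.abs(a.x - b.x);
  if (width(p.leftShoulder, p.rightShoulder) < 50) failed.add('bodyTurned');
  if (width(p.leftKnee, p.rightKnee) <= width(p.leftHip, p.rightHip) - 10) {
    failed.add('legsTooClose');
  }
  return [...failed];
}
```

Returning the failed rule labels instead of a single boolean makes it easier to show the user a specific message, for example asking them to lower their arms or face the camera. A call might look like checkStandingPosture(pose.keypoints), where pose is one entry of the poses array.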

Implementation Challenges

While TensorFlow.js provides robust pose detection, there are specific challenges in implementing a smooth and reliable validation process.

- Resolution and Aspect Ratio:

The plugin requires high-resolution images for accurate processing, but defining the exact resolution input can be challenging. Instead of enforcing strict pixel dimensions (e.g., 768px x 1024px), using an aspect ratio allows flexibility across different devices. By requiring images to meet a relative width-to-height ratio, we can maintain quality without restricting device compatibility.

- Handling Incomplete Pose Data:

TensorFlow.js can sometimes return high confidence scores even if certain body parts are out of frame, making it difficult to identify incomplete images accurately. Custom logic is essential here to account for missing parts despite high confidence scores, ensuring that images with cropped limbs or cut-off body parts are flagged for resubmission.

- Creating a Flexible Validation Formula:

There is no perfect formula for pose validation that fits every possible scenario. For example, requirements may vary for front-facing versus side-view poses. To address this, custom rules and thresholds are defined for each specific use case. This allows us to fine-tune the formula over time, adapting validation rules as more user data and feedback become available.
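As a rough illustration of the first two challenges, the sketch below shows one possible aspect-ratio check and one possible heuristic for cropped body parts. Everything in it is an assumption rather than the plugin's actual logic: the function names, the 3:4 target ratio (derived from the 768 x 1024 example), the 0.05 tolerance, and the idea of flagging keypoints whose position lies outside or too close to the edge of the frame.

```javascript
// Hypothetical target ratio (3:4, as in 768 x 1024) and tolerance; tune per use case.
const TARGET_RATIO = 768 / 1024;
const RATIO_TOLERANCE = 0.05;

// Accepts any resolution whose width-to-height ratio is close to the target.
function hasAcceptableAspectRatio(width, height) {
  if (width <= 0 || height <= 0) return false;
  return Math.abs(width / height - TARGET_RATIO) <= RATIO_TOLERANCE;
}

// Returns the names of keypoints whose position falls outside the frame
// (or within `margin` pixels of its edge), regardless of confidence score.
// width and height must be in the same coordinate space as the keypoints.
function keypointsOutsideFrame(keypoints, width, height, margin = 0) {
  return keypoints
    .filter(({ position: { x, y } }) =>
      x < margin || y < margin || x > width - margin || y > height - margin)
    .map((kp) => kp.part);
}
```

In the browser, the original image dimensions for the ratio check can be read from the image element's naturalWidth and naturalHeight properties. A non-empty result from keypointsOutsideFrame could be used to ask the user to step back and retake the photo.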

Conclusion

Human pose validation is essential in virtual try-on applications, where accurate and high-quality images ensure better clothing fit predictions and improve user experience. By leveraging TensorFlow.js, we can efficiently implement client-side validation that filters out unsuitable images, enhancing the overall accuracy and effectiveness of virtual try-ons. Although challenges such as resolution flexibility and imperfect pose detection exist, custom rules and ongoing improvements make this approach viable for real-world e-commerce applications.

As this technology evolves, fine-tuning validation models and incorporating more sophisticated algorithms will only improve accuracy and applicability. With robust human pose validation, virtual try-ons are poised to become a standard, transforming the online clothing shopping experience.
